Ubiquitous Control of Floating Base Robots: In this work we show the use of centroid vectoring for attitude control of floating base robots. To derive the control algorithm, we use dynamic and kinematic models of the robot and a ubiquitous Jacobian matrix, which allows us to control the orientation of the robot's main body by adjusting the control input to its actuators. Controlling the orientation of the main body is important because that is typically where the sensors, scientific payload, and/or manipulators are attached. Experiments on aerial and underwater vehicles using the same algorithm show how this method translates across different platforms and actuation methods. We demonstrate this system in simulation and experimentally on real-world robots. These robots include the μMORUS UAS and the AquaShoko UUV platforms.

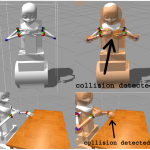

Push Grasping: This project contributes to the work on push grasping by producing data-driven models of the effects of the chosen angle of approach for anthropomorphic hands. An overview of previous work on robotic grasping is first presented. Thousands of push grasping trials are then compared between rigid angled funnel configurations coupled with a Baxter Electric Gripper end effector and the anthropomorphic, 16 DOF Allegro Hand end effector, each at different approach angles. Geometrical capture regions for each set of trials are gathered. Push grasping is then applied to the realm of ground vehicles by coupling a mobile manipulator robot and gathering the capture region as the body of the mobile manipulator rotates.

Hotel Room of the Future: Our goal within the “Hotel Challenge,” as part of the IEEE RAS Winter School on Consumer Robotics Workshop, was to make the Hotel Room of the Future (HRF). In the “Hotel Room of the Future” we put the guest at the center of all that happens within the room. This means that the guest, not a static position in the room, was the measure for real-time feedback. Specifically, we made the following:

• Human-in-the-loop feedback
• Personalized temperature control
• Direct-to-room access
• Biometric/mood-based lighting and music
• Robotic assistant for room management, companionship, and security

Four examples were implemented to show our overall idea. All implementation was done within the four-day IEEE RAS Winter School on Consumer Robotics and is only meant as a proof of concept.

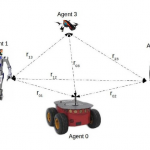

Local Positioning System: Traditionally, localization systems for formation-keeping have required external references to a world frame, such as GPS or motion capture. Humans wishing to maintain formations with each other use visual line-of-sight, which is not possible in cluttered environments that may block their view. Our Local Positioning System (LPS) is based on ultra-wideband ranging devices and it enables teams of robots and humans to know their relative locations without line-of-sight. We demonstrate experimental results of our system by comparing it to a Vicon ground-truth system and by demonstrating that it is suitable for real-time use by allowing teams or swarms of robots to maintain formations with other robots and humans.
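The idea of recovering relative locations from range measurements alone can be illustrated with a small sketch. The description above does not say which estimation method the LPS uses, so the example below swaps in classical multidimensional scaling (MDS) as a stand-in. It assumes noise-free, symmetric pairwise ranges, and the function names `relative_positions` and `pairwise_ranges` are hypothetical.

```python
import numpy as np


def relative_positions(ranges, dim=2):
    """Estimate relative coordinates from a symmetric matrix of pairwise ranges.

    Uses classical MDS, which is an illustrative stand-in and not
    necessarily the method used by the LPS described above.
    """
    d2 = np.asarray(ranges, dtype=float) ** 2
    n = d2.shape[0]
    # Double-center the squared distances to obtain a Gram matrix.
    centering = np.eye(n) - np.ones((n, n)) / n
    gram = -0.5 * centering @ d2 @ centering
    # eigh returns eigenvalues in ascending order; keep the `dim` largest.
    eigvals, eigvecs = np.linalg.eigh(gram)
    top = np.argsort(eigvals)[::-1][:dim]
    scale = np.sqrt(np.clip(eigvals[top], 0.0, None))
    return eigvecs[:, top] * scale


def pairwise_ranges(points):
    """Euclidean distance between every pair of rows in `points`."""
    diff = points[:, None, :] - points[None, :, :]
    return np.linalg.norm(diff, axis=-1)


# Hypothetical agent layout, used only to generate example ranges.
truth = np.array([[0.0, 0.0], [4.0, 0.0], [4.0, 3.0], [0.0, 3.0]])
estimate = relative_positions(pairwise_ranges(truth))
```

The recovered coordinates match the true layout only up to a rotation, reflection, and translation, which is expected when no external world-frame reference is available. The inter-agent distances, however, are preserved.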

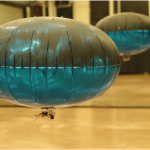

SWARM Control Architecture: This work focuses on the creation of methods and software capable of 1) sharing state information throughout a swarm, 2) sharing intent between agents, and 3) allowing for local or global control. This work is key to our overarching research on swarms and emergent behavior. The majority of our tests occur on lighter-than-air autonomous agents (LTA3).

Gesture Based Control of SWARM and Multi-Agent Systems: The goal of this work is to determine ways to control a swarm system using a natural human non-verbal interface. Our primary focus is on gestures and how we can use them to command the overall action space of a swarm of agents. Examples of control may be to “do task x” or “make your formation longer.” A key point is that this should be done through a natural interface and an overarching algorithm that does not rely on “pre-canned” actions.

Utilizing the Gaze Triggering in Human-Robot Interaction: When interacting with others, we use information from gestures, facial expressions, and gaze direction to make inferences about what others think, feel, or intend to do. Gaze direction also triggers shifts of attention to gazed-at locations and establishes joint attention between gazer and observer. The ability to follow gaze develops early in life and is a prerequisite for more complex social-cognitive processes like action perception, mentalizing, and language acquisition. It has been shown that robot gaze induces similar gaze following effects in observers as human gaze, with positive effects on attitudes and performance in human-robot interaction. However, so far most studies have used images or videos in controlled laboratory settings to investigate gaze following in human-robot interaction rather than realistic, embodied social robot platforms. We have shown through experiment that gaze following can be observed in real-time interactions with embodied social robot platforms. We are continuing this work to determine methods for utilizing this effect. This is part of our ongoing work on non-verbal human-robot interaction, empathy, and trust in AI.

ThinSat: The overall goal of this project is to design, manufacture, and deploy experiments that run automatically on small satellites known as “ThinSats.” Our lab’s primary focus on this project is the mechanical design and fabrication of various parts of the ThinSat device.

Underwater Legged Locomotion: When operating a submerged vehicle near the sea floor, cameras are among the primary sensors used. These cameras become obstructed by silt and other debris when the ground is disturbed by the thrust from the submerged vehicle’s screws/thrusters. In many cases the visual sensors become useless in these conditions. This work strives towards creating a robot that can operate near the sea floor while keeping the area free of silt and debris and therefore viewable. This is ongoing work that has resulted in, and is tested on, our custom-built underwater legged robot, “AquaShoko.”

Human-Robot Collaboration Using Non-verbal Communication: This work focuses on the implementation of mechanically coupled tasks between a humanoid robot and a human. This focus comes from the push for robots to work with humans in everyday life as an overarching goal for the field. Co-robots, or robots that work alongside humans, may be guided by the humans through physical contact, such as the human grasping the robot’s hand to gently guide it along a desired path. This is part of our ongoing work on non-verbal human-robot interaction, empathy, and trust in AI.

Extending the Life of Legacy Robots: The goal of this work is to add modern tools to legacy robots while retaining the original tools and original calibration procedures/utilities through the use of a lightweight middleware connected to the communications level of the robot. This system has been tested on the Hubo KHR-4 series and the Mobile, Dexterous, Social (MDS) Robot originally released in 2008. The robot is being actively used at multiple locations including the U.S. Naval Research Laboratory’s Laboratory for Autonomous Systems Research (NRL-LASR).

Creating Trust Between Human and Humanoid: This work progresses towards providing an autonomous dialog via individualized, instantaneous tactile interactions between a humanoid robot’s end-effector and a human’s hand. In this work we propose and describe an experiment that explores whether this autonomous dialog increases empathy between the human and the robot. We believe that empathy and comradery between and within human-robot teams is the key to co-robotics. This is part of our ongoing work on non-verbal human-robot interaction, empathy, and trust in AI.

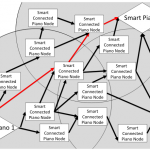

Smart Connected Pianos: This work drives towards an augmented musical reality system that will help increase comradery through musical interaction, with a focus on comradery between people who are separated geographically and who hold different political, social, and financial backgrounds. Specific topics covered in this work are: 1) how to measure comradery and other emotions through musical interaction, 2) methods for audience interaction through reactive audio and visual feedback mechanisms, and 3) methods for keeping the real-time control fast enough, and latency low enough, to allow for musical interaction.

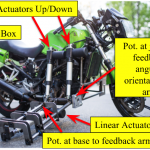

Vehicle Lean Recovery System (VLRS): Motorcycle accidents can cause property damage, bodily injury, and even death. The purpose of the vehicle lean recovery system is to introduce a level of safety and security to motorcycle riding that was not previously in place. Three preliminary designs, each capable of preventing a motorcycle from rolling over, were developed and tested. These designs were developed through mathematical modeling, 3D simulation and CAD modeling, cost analysis, preliminary calculation of required forces, power requirements, weight of components, and quantitative estimates of feasible range and performance. These quantitative metrics were compared across the designs and used to create a weighted decision matrix. Finally, a prototype was manufactured and tested.

Apparatus for Remote Control of Humanoid Robots (ARCHR):

The ARCHR team built physical controllers whose motions are mapped directly onto a robot. We made a 1:1 scale controller for Minibot, a 44% scale controller for Baxter, and a scaled controller for the Hubo DRC. The Hubo DRC was run in simulation, while the controller was real hardware that told the computer where to move the robot.

The Magnetic Resonator Guitar (MR.G):

MR.G was inspired by Drexel’s Magnetic Resonator Piano. Much like the Magnetic Resonator Piano, MR.G is a hybrid acoustic-electric instrument. While the sound is processed electronically, the music the guitar produces is entirely acoustic. There are no speakers; the only amplification comes from the electromagnetic actuation.

Android Robot Controller (ARC): The Android Robot Controller is designed to be easy to use and versatile. The user picks a destination IP address and port, and UDP data is sent to that location. The data consists of six button press events, two joysticks (x, y), and one touch pad (x, y). This system was designed and tested on a Nexus 7 running Android 4.4. Get the latest version of ARC here.
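As a rough illustration of the kind of datagram described above, the sketch below packs six button states and three (x, y) pairs into a single UDP payload. The actual ARC wire format is not given here, so the byte layout (`PACKET_FORMAT`) and the helper names `pack_state`, `unpack_state`, and `send_state` are assumptions made purely for the example.

```python
import socket
import struct

# Hypothetical wire layout (NOT ARC's documented format):
# 6 button bytes (0 or 1), then 6 little-endian float32 values:
# joystick 1 (x, y), joystick 2 (x, y), touch pad (x, y).
PACKET_FORMAT = "<6B6f"
PACKET_SIZE = struct.calcsize(PACKET_FORMAT)  # 6 + 6 * 4 = 30 bytes


def pack_state(buttons, joy1, joy2, touch):
    """Serialize one controller snapshot into bytes."""
    if len(buttons) != 6:
        raise ValueError("expected exactly 6 button states")
    flags = [1 if pressed else 0 for pressed in buttons]
    return struct.pack(PACKET_FORMAT, *flags, *joy1, *joy2, *touch)


def unpack_state(payload):
    """Inverse of pack_state: returns (buttons, joy1, joy2, touch)."""
    values = struct.unpack(PACKET_FORMAT, payload)
    buttons = [bool(v) for v in values[:6]]
    axes = values[6:]
    return buttons, axes[0:2], axes[2:4], axes[4:6]


def send_state(payload, ip, port):
    """Send one datagram to the user-selected destination IP and port."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(payload, (ip, port))


# Example snapshot with made-up values.
payload = pack_state(
    [True, False, False, True, False, False],
    (0.5, -0.5),
    (0.0, 1.0),
    (0.25, 0.75),
)
```

A receiver on the robot side would call `unpack_state` on each datagram it reads. A fixed-size binary layout keeps each packet small, although a text format such as JSON would be easier to inspect while debugging.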

Baja SAE: George Mason University competes in the Baja SAE challenge. Team members consist of Mechanical Engineers, Electrical Engineers, and Computer Scientists. Our mission: to build an ATV-style race car that can handle any terrain, any competition, and any challenge. This is engineering at its finest. Please visit the project’s website at http://baja.lofarolabs.com/